From Launch and Iterate to Prompt and Iterate

From weekend idea to working Chrome extension in 2 hours

"Launch and iterate" was the startup mantra for years. A team would build v0, launch it, get feedback from users, and then iterate based on this feedback to launch v1. The key message was to launch sooner rather than later so you could begin getting valuable feedback from customers.

This approach emerged because adding features was expensive, and it was easy to get sucked into a cycle of feature bloat where v0 would take a long time to ship. When it finally did ship after several months or even quarters, the team might find that it missed the mark and users didn't find the product valuable. This would be a costly mistake.

In the age of personal software, where you can build applications for yourself using AI, I find that "launch and iterate" has been replaced by "prompt and iterate." You prompt AI to build software to solve a specific problem that you have. Then you immediately test it (since you're the only user, you only need your feedback and it's instantaneous). Then you prompt AI to make changes to your software based on your testing. In this loop, the "launch" itself is almost a non-event. You're not spending any time on that at all. You're spending time instead on prompting and testing.

Building ScribbleTab

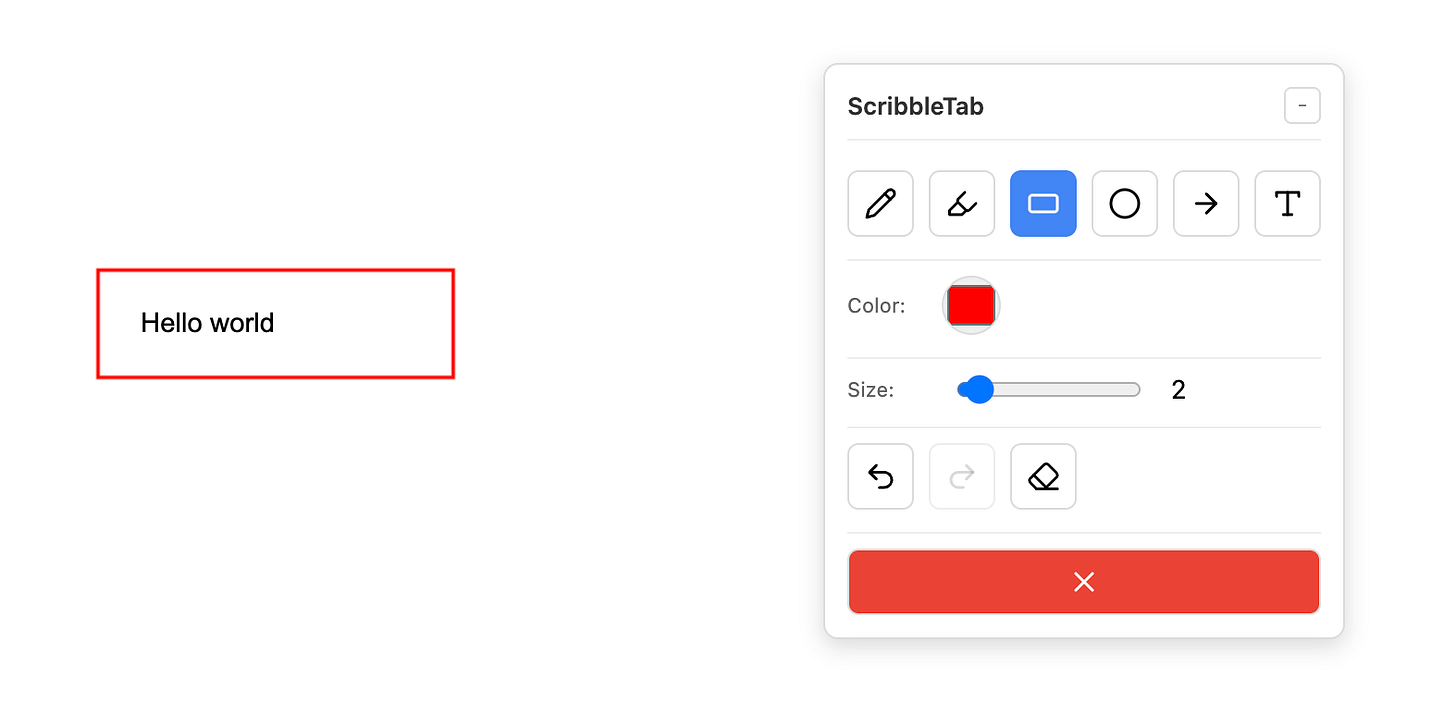

To show how this works in practice, let me walk you through building ScribbleTab, a Chrome extension to draw, highlight, and comment on any web page. There are existing Chrome extensions that do this, but I decided to build one tailored to my requirements. For example, I wanted to be able to move the toolbar, toggle its visibility with a keyboard shortcut, and undo or redo my changes.

Here's how I built it using the "prompt and iterate" cycle. Since the Claude 4 models dropped recently, I used this opportunity to test the Opus 4 and Sonnet 4 models. I used the larger Opus model to write the spec for the extension and then used Sonnet to implement it.

Starting with the Spec

My first prompt to Opus was straightforward:

Create a Chrome extension that will let me draw on the screen, select/annotate shapes to showcase UI elements, draw text, etc. It should work on a layer above what's on the screen. Use Manifest V3.

Write the spec for this, but do not code.

Here's the detailed spec that Opus wrote:

Chrome Extension Specification: Web Annotator

Overview

A Chrome extension that provides an overlay drawing and annotation layer on any web page, allowing users to draw, highlight UI elements, add text annotations, and create visual guides directly on top of web content.

Core Features

Drawing Tools

Freehand Drawing: Pen/pencil tool with variable thickness and color options

Shapes: Rectangle, circle, arrow, line tools for structured annotations

Highlighter: Semi-transparent marker for emphasizing content

Eraser: Remove individual annotations or clear all

Text Annotations

Text Boxes: Click to place resizable text boxes anywhere on screen

Font Options: Size, color, basic formatting (bold, italic)

Callout Bubbles: Speech bubble-style annotations with connecting arrows

UI Element Selection

Smart Selection: Hover to highlight DOM elements with visual feedback

Element Outlining: Draw attention boxes around selected elements

Element Information: Optional tooltip showing element type, class, or ID

Layer Management

Overlay Canvas: Transparent layer that sits above all page content

Z-index Management: Ensure annotations stay on top of page elements

Scroll Synchronization: Annotations move with page scroll

Viewport Persistence: Maintain relative positions during window resize

Controls & Interface

Floating Toolbar: Draggable control panel with tool selection

Keyboard Shortcuts: Quick access to common tools (e.g., Ctrl+Z for undo)

Color Picker: Preset colors plus custom color selection

Tool Options Panel: Context-sensitive settings for selected tool

Technical Architecture

Manifest V3 Structure

Service Worker: Background script for extension lifecycle management

Content Script: Injected into web pages to create annotation layer

Action Popup: Quick access menu for enabling/disabling annotations

Options Page: Detailed settings and preferences

Permissions Required

activeTab: Access to current tab for content script injection

storage: Save user preferences and annotation data

scripting: Inject content scripts dynamically

Data Storage

Local Storage: User preferences, tool settings, color history

Session Storage: Temporary annotation data per tab

Export Options: Save annotations as image (PNG) or data (JSON)

User Workflow

Activation

Click extension icon or use keyboard shortcut

Overlay appears with floating toolbar

Page content remains interactive below annotation layer

Drawing Process

Select tool from toolbar

Configure tool options (color, size, etc.)

Click/drag on page to create annotations

Use selection tool to modify existing annotations

Deactivation

Click extension icon again or use escape key

Option to save or discard current annotations

Overlay disappears, returning normal page interaction
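For readers who haven't worked with Manifest V3, here is a rough sketch of how the spec's architecture and permissions sections could map onto a manifest. It is written as a TypeScript object that serializes to `manifest.json`. This is my own illustration, not part of the spec Opus wrote and not the manifest from the finished extension; the file names (`background.js`, `popup.html`, `options.html`) and the version string are placeholders I made up.

```typescript
// Illustrative only: file names and version are hypothetical placeholders.
const manifest = {
  manifest_version: 3,
  name: "Web Annotator",
  version: "0.1.0",
  // The three permissions listed in the spec
  permissions: ["activeTab", "storage", "scripting"],
  // Manifest V3 replaces persistent background pages with a service worker
  background: { service_worker: "background.js" },
  // Toolbar icon popup ("Action Popup" in the spec)
  action: { default_popup: "popup.html" },
  // "Options Page" in the spec
  options_page: "options.html",
};

// Serialize to the JSON text that would be saved as manifest.json
function toManifestJson(): string {
  return JSON.stringify(manifest, null, 2);
}
```

Because the spec asks for `scripting` plus `activeTab`, the content script would be injected on demand when you click the icon, so this sketch has no static `content_scripts` entry.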

The Iteration Process

I was happy with the spec Opus wrote, so I switched to Sonnet 4 for the actual implementation. Within 10 minutes, I had a v0 of the extension installed in my browser. Then I began a series of test→prompt→test cycles that looked like this:

Iteration 1: Claude's initial implementation worked well for drawing tools, but text annotations weren't working. Also, I realized I could not minimize or hide the toolbar and it was in my way. I told Claude the text tool was broken and that I needed to hide the toolbar without losing annotations. Within minutes, Claude fixed the text positioning logic and made it possible to toggle the toolbar's visibility.

Iteration 2: After more testing on different web pages, I realized the toolbar was stuck in one position and just being able to toggle its visibility wasn't flexible enough. I wanted to make the toolbar movable. I asked Claude to implement this and soon I had this working flawlessly.
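To give a sense of what "movable" involves, here is a minimal sketch of the position math behind a draggable toolbar. This is not the code Claude generated; the names (`dragToolbar`, `grabOffset`) are my own for illustration, and I'm assuming the toolbar is positioned with viewport coordinates and should never be dragged off screen.

```typescript
interface Point { x: number; y: number; }
interface Size { width: number; height: number; }

// Restrict a value to the inclusive range [min, max]
function clamp(value: number, min: number, max: number): number {
  return Math.min(Math.max(value, min), max);
}

// Compute the toolbar's new top-left corner while it is being dragged.
// pointer:    current pointer position in viewport coordinates
// grabOffset: where inside the toolbar the drag started
function dragToolbar(
  pointer: Point,
  grabOffset: Point,
  toolbar: Size,
  viewport: Size,
): Point {
  // Largest top-left position that still keeps the whole toolbar visible
  const maxX = Math.max(0, viewport.width - toolbar.width);
  const maxY = Math.max(0, viewport.height - toolbar.height);
  return {
    x: clamp(pointer.x - grabOffset.x, 0, maxX),
    y: clamp(pointer.y - grabOffset.y, 0, maxY),
  };
}
```

In a content script, a function like this would be called from a pointer-move handler and its result applied to the toolbar's `left` and `top` styles. Keeping the math in a pure function makes it easy to test without a browser.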

Iteration 3: Further testing revealed that the undo system was broken. Clicking Undo would delete all annotations. I prompted Claude to fix this and also asked for redo functionality to be added.
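A common way to get this behavior is a pair of stacks, where Undo removes only the most recent annotation instead of clearing everything. The sketch below shows that general technique under my own assumptions; it is not the code from the extension, and the `Annotation` shape and `AnnotationHistory` class are hypothetical.

```typescript
// Hypothetical annotation shape; the real data model may differ.
interface Annotation {
  id: number;
  tool: "pen" | "rect" | "text";
}

class AnnotationHistory {
  private done: Annotation[] = [];   // annotations currently on the canvas
  private undone: Annotation[] = []; // annotations removed by undo, newest last

  add(annotation: Annotation): void {
    this.done.push(annotation);
    // A fresh action invalidates anything that could have been redone
    this.undone = [];
  }

  // Remove only the most recent annotation; returns false if nothing to undo
  undo(): boolean {
    const last = this.done.pop();
    if (last === undefined) return false;
    this.undone.push(last);
    return true;
  }

  // Restore the most recently undone annotation; returns false if none
  redo(): boolean {
    const next = this.undone.pop();
    if (next === undefined) return false;
    this.done.push(next);
    return true;
  }

  // Copy of what should be drawn right now
  visible(): Annotation[] {
    return [...this.done];
  }
}
```

After each `undo()` or `redo()`, the canvas would be cleared and redrawn from `visible()`. Clearing the redo stack in `add()` is a design choice that matches how most editors behave: once you draw something new, the old "future" is gone.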

Iteration 4: My extension was "feature complete" at this point, so I decided to improve its look and feel. Claude had used various emojis for toolbar icons and these looked hideous, so I bluntly told Claude the icons sucked and asked it to replace every icon with crisp, professional SVG designs.

Iteration 5: Claude replaced emojis with beautiful new SVG icons. However, this change caused tool selection to become janky and unreliable. I turned on extended thinking mode and asked Claude to fix this issue while keeping the new icons.

The Result

I finally arrived at a product I was happy with. The extension does exactly what I need: it lets me quickly annotate web pages with drawings and text, has the keyboard shortcuts I wanted, and has a draggable toolbar that stays out of my way.

You can view the code on my GitHub.

I even created a banner image and an icon for ScribbleTab using ChatGPT's image generation feature.

Reflections on Prompt and Iterate

This experience reinforced how fundamentally different building personal software has become. When you're building for yourself, you already know exactly what you want; you just need the time and skills to build it.

The prompt-and-iterate approach lets you focus entirely on problem-solving and refinement rather than the mechanics of coding. Each iteration cycle took minutes rather than hours, and I could test immediately since I was the only user that mattered. Significant advances in the coding capabilities of AI models like Claude 4 and Gemini 2.5 Pro are what make this rapid, personal development cycle a reality.

In total, it took me just over 2 hours from start to finish. Without the help of AI, it would have easily taken me weeks to build this extension from scratch. In fact, I would not even have attempted to build it because I couldn't have afforded to spend so much time on it. That's the other huge benefit of AI: it makes the impossible suddenly feasible.

Have you used AI for personal projects in a similar way? What are your experiences with 'prompt and iterate'?